With the introduction of virtualization paradigms first and cloud models later, architectures for implementing digital services have evolved from monolithic designs to distributed and scalable frameworks, moving through service-oriented architectures and on to micro-services. The overall concept is the design of stand-alone service units that are completely decoupled and isolated from each other, built according to the principle of “single responsibility”, and that can be combined and chained through public interfaces. The loosely-coupled nature of such elementary components allows some of them to be replaced, duplicated, or removed without affecting the operation of the overall architecture, hence building “service chains” that evolve dynamically at run-time under the explicit control of software engineers.

Despite this evolutionary trend, most cyber-security frameworks still rely on discrete appliances conceived to run on legacy servers. Even though events and logs generated by such tools can be easily collected, aggregated and processed by security information and event management (SIEM) systems, the whole process relies heavily on human intervention to set up and configure all components, not to mention the difficulty of managing the technical and administrative heterogeneity that arises when different domains are involved.

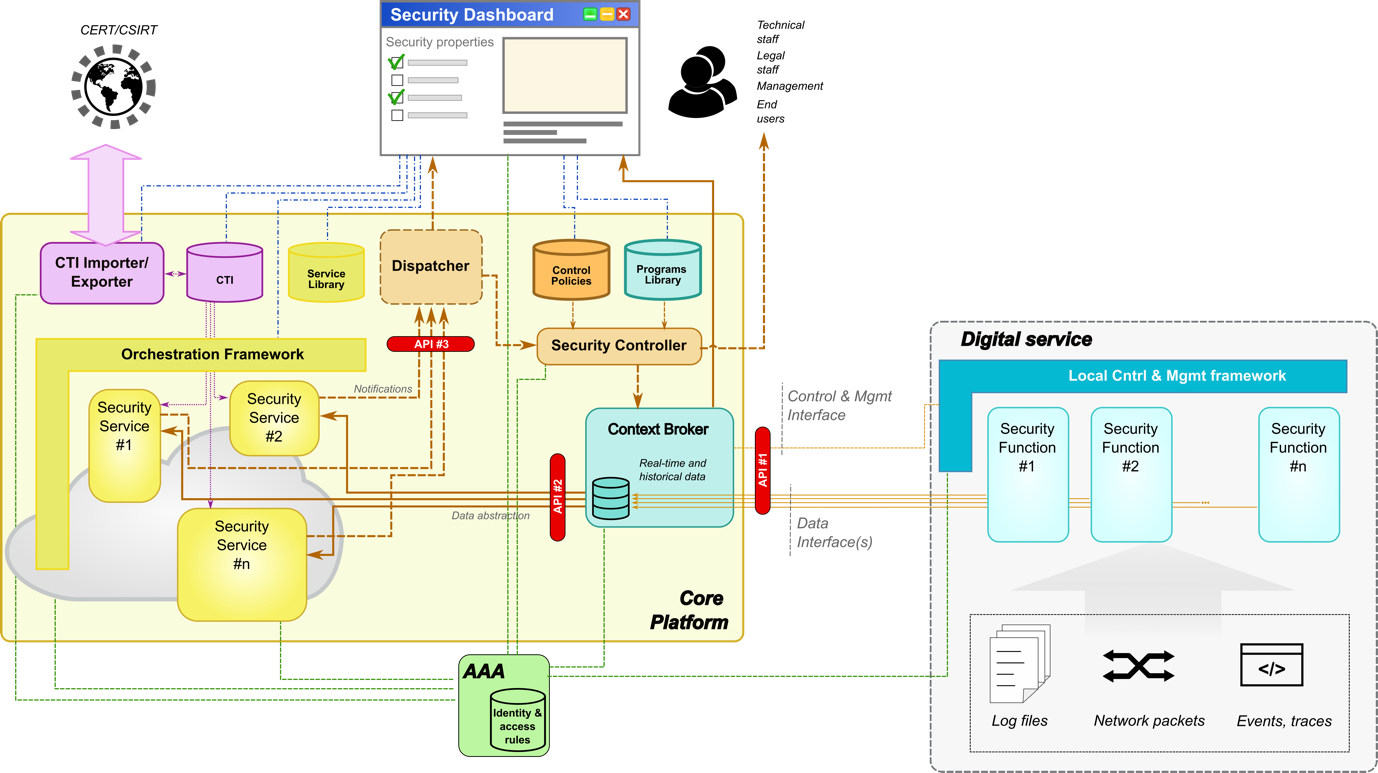

The GUARD project aims to go beyond the legacy approach and introduce new cyber-security paradigms that better fit the composable and dynamic nature of modern digital services. In this respect, the main concept is a centralized Core Platform that leverages local security functions embedded in each digital component (infrastructures, software, things) in a programmatic way. Following some general trends in the software domain, local security services may be engineered as portable “sidecars” that include the following capabilities under the control of a Local Control and Management Framework:

- Monitoring of log files and system events (including, for instance, information and measurements available from pseudo-filesystems such as proc and sys in Linux);

- Inspection of network traffic at the different OSI layers, from raw packets to application messages (like HTTP, FTP, SOAP, DNS);

- Tracing of execution and control paths, which may be limited to system calls and I/O calls, or extend to memory and registry dumps;

- Enforcement of security rules at the network and application layers (for instance, by dropping packets based on IP header fields, by blocking specific HTTP request messages, or by preventing access to files or peripherals).
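As an illustration of the enforcement capability, the sketch below shows how a local agent might decide whether to drop a packet based on IP header fields. It is a minimal example, not the GUARD implementation: the `Packet`, `DropRule`, and `verdict` names are hypothetical, and a real agent would hook into the host's packet-filtering facilities rather than evaluate rules in pure Python.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Iterable, Optional


@dataclass(frozen=True)
class Packet:
    """Hypothetical, simplified view of the header fields of one packet."""
    src: str
    dst: str
    protocol: str
    dst_port: int


@dataclass(frozen=True)
class DropRule:
    """Hypothetical drop rule; a field left as None matches any value."""
    src_net: Optional[str] = None
    protocol: Optional[str] = None
    dst_port: Optional[int] = None

    def matches(self, pkt: Packet) -> bool:
        # Every field that is set must match for the rule to apply.
        if self.src_net is not None and ip_address(pkt.src) not in ip_network(self.src_net):
            return False
        if self.protocol is not None and pkt.protocol != self.protocol:
            return False
        if self.dst_port is not None and pkt.dst_port != self.dst_port:
            return False
        return True


def verdict(pkt: Packet, rules: Iterable[DropRule]) -> str:
    """Return 'drop' if any rule matches the packet, otherwise 'accept'."""
    return "drop" if any(rule.matches(pkt) for rule in rules) else "accept"
```

In this sketch, rules are plain data, so a central platform could in principle push them to the local agent at run-time without redeploying the service.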

Through a Context Broker, the centralized Core Platform is expected to discover all digital services involved in a given chain, to retrieve their security capabilities, and to programmatically collect the data and events needed to feed a set of Security Services, which implement detection processes, threat investigation, advanced analytics, vulnerability assessment, etc. A Security Controller is expected to automate response and mitigation, starting from simple rule management frameworks and paving the way for more advanced reasoning systems that leverage Artificial Intelligence. A user-friendly dashboard is necessary to manage the whole system and to provide situational awareness to end users; in addition, a Cyber-Threat Intelligence Exporter/Importer should remove the need for manual and error-prone procedures for information sharing. Additional blocks provide persistent storage for data and for the lightweight inspection programs that can be injected into local environments.
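The discovery, collection, and response flow described here can be sketched in a few lines. The example below is an assumption-laden illustration rather than the GUARD API: `LocalAgent`, `ContextBroker`, `security_controller`, the `failed_login` event type, and the threshold rule are all hypothetical names and choices made for the sake of the sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class LocalAgent:
    """Hypothetical local security agent attached to one digital service."""
    service: str
    # Maps a capability name to a callable that returns the pending events.
    capabilities: Dict[str, Callable[[], List[dict]]] = field(default_factory=dict)


class ContextBroker:
    """Hypothetical registry of the agents that belong to each service chain."""

    def __init__(self) -> None:
        self._chains: Dict[str, List[LocalAgent]] = {}

    def register(self, chain: str, agent: LocalAgent) -> None:
        self._chains.setdefault(chain, []).append(agent)

    def discover(self, chain: str) -> Dict[str, List[str]]:
        # List every service in the chain with the capabilities it exposes.
        return {a.service: sorted(a.capabilities) for a in self._chains.get(chain, [])}

    def collect(self, chain: str, capability: str) -> List[dict]:
        # Pull events from every agent in the chain that offers the capability.
        events: List[dict] = []
        for agent in self._chains.get(chain, []):
            source = agent.capabilities.get(capability)
            if source is not None:
                events.extend({**e, "service": agent.service} for e in source())
        return events


def security_controller(events: List[dict], threshold: int = 3) -> List[str]:
    """Toy rule: request a block for services with too many failed logins."""
    counts: Dict[str, int] = {}
    for event in events:
        if event.get("type") == "failed_login":
            counts[event["service"]] = counts.get(event["service"], 0) + 1
    return [f"block:{svc}" for svc, n in sorted(counts.items()) if n >= threshold]
```

A usage pattern under these assumptions would be to register one agent per service, call `discover` to learn what each one can report, call `collect` for the capability a given Security Service needs, and pass the resulting events to `security_controller` to obtain the mitigation actions.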